GANs

Reference

- Check this Kaggle competition

- Fast.ai decrappify & DeOldify

Applications:

- Image to image problems

  - Super resolution

  - Black and white colorization

    - Colorful Image Colorization 2016

    - DeOldify 2018, SotA

  - Decrappification

  - Artistic style

- Data augmentation:

  - New images

    - From latent vector

    - From noise image

Training

- Generate labeled dataset

  - Degrade the ground-truth images to produce the input images.

  - This step depends on the problem: the input data could be crappified images, black & white images, noise, a latent vector…

- Train the GENERATOR (most of the training time goes here)

  - Model: U-Net with a pretrained ResNet backbone + self-attention + spectral normalization

  - Loss: pixel-wise mean squared error (MSE) or L1 loss

  - Better loss: perceptual loss (aka feature loss), which compares feature maps of a pretrained network instead of raw pixels

  - Save the generated images.

- Train the DISCRIMINATOR (aka critic) to tell real images from generated ones.

  - Model: pretrained binary classifier + spectral normalization

- Train BOTH nets alternately (ping-pong) with 2 losses: the original generator loss (pixel or perceptual) and the discriminator's adversarial loss.

  - With the NoGAN approach, this step is very quick (roughly 5% of the total training time).

  - With a traditional progressively sized GAN approach, this step is very slow.

  - If this step runs for too long, artifacts and glitches start appearing in the renderings.
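
The "generate labeled dataset" step can be sketched in NumPy as a hypothetical `crappify` function that degrades a ground-truth image into a model input. This is a minimal sketch assuming float images in [0, 1] with shape (H, W, 3); the function names, the average-pool downscale and the Gaussian noise level are illustrative assumptions, not the fast.ai or DeOldify code.

```python
import numpy as np

def to_grayscale(img):
    """Black & white input: BT.601 luma, copied to all 3 channels."""
    gray = img @ np.array([0.299, 0.587, 0.114])
    return np.repeat(gray[..., None], 3, axis=-1)

def downscale_upscale(img, factor):
    """Low-resolution input: average-pool by `factor`, then upsample back
    with nearest neighbour (rows/cols that don't fit are cropped)."""
    h, w, c = img.shape
    h2, w2 = h // factor, w // factor
    cropped = img[: h2 * factor, : w2 * factor]
    small = cropped.reshape(h2, factor, w2, factor, c).mean(axis=(1, 3))
    return small.repeat(factor, axis=0).repeat(factor, axis=1)

def crappify(img, factor=4, noise_std=0.05, grayscale=False, seed=None):
    """Hypothetical degradation pipeline returning an (input, target) pair."""
    rng = np.random.default_rng(seed)
    x = to_grayscale(img) if grayscale else img
    x = downscale_upscale(x, factor)
    x = x + rng.normal(0.0, noise_std, size=x.shape)  # additive Gaussian noise
    target = img[: x.shape[0], : x.shape[1]]          # same crop as the input
    return np.clip(x, 0.0, 1.0), target

rng = np.random.default_rng(0)
target_img = rng.random((64, 64, 3))
inp, tgt = crappify(target_img, factor=4, grayscale=True, seed=0)
print(inp.shape, tgt.shape)  # (64, 64, 3) (64, 64, 3)
```

Changing the degradation (grayscale only, noise only, a different `factor`) changes which problem the generator learns: colorization, denoising or super-resolution.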
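
The generator losses above can be compared with a small NumPy sketch. `feature_loss` is a simplified, hypothetical perceptual loss: the caller passes the feature extractors, which in practice would be intermediate layers of a frozen pretrained network (e.g. VGG); here toy gradient filters stand in for them, as an assumption for illustration only.

```python
import numpy as np

def mse_loss(pred, target):
    """Mean squared pixel error."""
    return float(np.mean((pred - target) ** 2))

def l1_loss(pred, target):
    """Mean absolute pixel error (L1)."""
    return float(np.mean(np.abs(pred - target)))

def feature_loss(pred, target, feature_extractors, weights, pixel_weight=1.0):
    """Perceptual (feature) loss sketch: an L1 pixel term plus weighted L1
    distances between feature maps. `feature_extractors` are caller-supplied
    callables (assumed; normally layers of a frozen pretrained network)."""
    loss = pixel_weight * l1_loss(pred, target)
    for extract, w in zip(feature_extractors, weights):
        loss += w * l1_loss(extract(pred), extract(target))
    return loss

rng = np.random.default_rng(0)
target = rng.random((16, 16, 3))
pred = target + 0.1  # uniformly brighter prediction
toy_extractors = [lambda im: np.diff(im, axis=0), lambda im: np.diff(im, axis=1)]
print(mse_loss(pred, target))  # ≈ 0.01
print(l1_loss(pred, target))   # ≈ 0.1
print(feature_loss(pred, target, toy_extractors, [1.0, 1.0]))  # ≈ 0.1
```

With these toy features a constant brightness shift only shows up in the pixel term, since it leaves the image gradients unchanged; a real perceptual loss similarly rewards matching structure and texture rather than exact pixel values.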
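
For the ping-pong phase, the two losses can be written as below. This is a sketch assuming the critic outputs raw logits and using the standard binary cross-entropy GAN loss with the non-saturating generator term; `adv_weight` is an assumed hyperparameter, and DeOldify's actual loss weighting may differ.

```python
import numpy as np

def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    return -np.logaddexp(0.0, -x)

def critic_loss(real_logits, fake_logits):
    """Binary cross-entropy: real images labelled 1, generated images labelled 0."""
    # log(1 - sigmoid(x)) == log_sigmoid(-x)
    return float(-np.mean(log_sigmoid(real_logits)) - np.mean(log_sigmoid(-fake_logits)))

def generator_loss(pred, target, fake_logits, adv_weight=0.01):
    """Original loss (L1 pixel here) plus a weighted adversarial term that
    rewards fooling the critic (non-saturating form: -log D(G(x)))."""
    pixel = np.mean(np.abs(pred - target))
    adversarial = -np.mean(log_sigmoid(fake_logits))
    return float(pixel + adv_weight * adversarial)

print(critic_loss(np.zeros(4), np.zeros(4)))  # ≈ 1.386 (2 ln 2: an undecided critic)
```

In the ping-pong phase, one step minimizes `critic_loss` with the generator frozen, the next minimizes `generator_loss` with the critic frozen.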

Tricks

- Self-Attention GAN (SAGAN): keeps distant regions of the generated image spatially coherent

- Spectral normalization: divides each layer's weights by their largest singular value to stabilize training

- Video

  - pix2pixHD

  - COVST: naively adds temporal consistency.

  - Video-to-Video Synthesis
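
Spectral normalization estimates each weight matrix's largest singular value cheaply with power iteration and divides the weights by it (Miyato et al., 2018). A NumPy sketch, assuming a 2-D weight matrix (a conv kernel would first be reshaped to `(out_channels, -1)`); `spectral_normalize` is a hypothetical helper, not a library function.

```python
import numpy as np

def spectral_normalize(W, u=None, n_iters=1, eps=1e-12, seed=0):
    """Return (W / sigma, u, sigma), where sigma estimates the largest
    singular value of W. `u` is the persistent left singular vector
    estimate, reused across training steps so one iteration per step suffices."""
    if u is None:
        u = np.random.default_rng(seed).normal(size=W.shape[0])
    for _ in range(n_iters):
        v = W.T @ u
        v /= np.linalg.norm(v) + eps
        u = W @ v
        u /= np.linalg.norm(u) + eps
    sigma = u @ W @ v  # Rayleigh-quotient style estimate of the top singular value
    return W / sigma, u, sigma

W = np.random.default_rng(0).normal(size=(64, 32))
W_sn, u, sigma = spectral_normalize(W, n_iters=1)
# A training loop would feed `u` back in at every step, refining the estimate.
W_sn, u, sigma = spectral_normalize(W, u=u, n_iters=1)
```

In PyTorch the same idea is available as `torch.nn.utils.spectral_norm` (newer versions: `torch.nn.utils.parametrizations.spectral_norm`), which keeps `u` as a buffer of the layer.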
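
The SAGAN self-attention block lets every position of a feature map aggregate features from all other positions, which is what provides the long-range spatial coherence mentioned above. A simplified NumPy sketch, assuming the 1x1 convolutions are plain matrices `Wf`, `Wg`, `Wh` (hypothetical names) and omitting refinements found in some implementations.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, Wf, Wg, Wh, gamma):
    """x: feature map (C, H, W). Wf, Wg: (C_k, C) and Wh: (C, C) act as 1x1 convs."""
    C, H, W = x.shape
    flat = x.reshape(C, H * W)               # N = H*W spatial positions
    f, g, h = Wf @ flat, Wg @ flat, Wh @ flat
    scores = f.T @ g                         # scores[i, j] = f(x_i) . g(x_j), shape (N, N)
    beta = softmax(scores, axis=0)           # beta[i, j]: attention of position j to position i
    o = h @ beta                             # o[:, j] = sum_i beta[i, j] * h(x_i)
    return (gamma * o + flat).reshape(C, H, W)  # residual connection scaled by gamma

rng = np.random.default_rng(0)
x = rng.normal(size=(16, 8, 8))
Wf, Wg = rng.normal(size=(2, 16)), rng.normal(size=(2, 16))  # C_k = C / 8
Wh = rng.normal(size=(16, 16))
y = self_attention(x, Wf, Wg, Wh, gamma=0.1)
print(y.shape)  # (16, 8, 8)
```

In SAGAN `gamma` is a learned scalar initialized to 0, so the layer starts as the identity and gradually learns to use non-local information.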

GANs (chronological order)

| Paper | Name | Date | Creator |
|---|---|---|---|
| GAN | Generative Adversarial Net | Jun 2014 | Goodfellow |
| CGAN | Conditional GAN | Nov 2014 | Montreal U. |
| DCGAN | Deep Convolutional GAN | Nov 2015 | |
| GAN v2 | Improved GAN | Jun 2016 | Goodfellow |
| InfoGAN | Information Maximizing GAN | Jun 2016 | OpenAI |
| CoGAN | Coupled GAN | Jun 2016 | Mitsubishi |
| Pix2Pix | Image to Image | Nov 2016 | Berkeley |
| StackGAN | Text to Image | Dec 2016 | Baidu |
| WGAN | Wasserstein GAN | Jan 2017 | |
| CycleGAN | Cycle GAN | Mar 2017 | Berkeley |
| ProGAN | Progressive growing of GAN | Oct 2017 | NVIDIA |
| SAGAN | Self-Attention GAN | May 2018 | Goodfellow |
| BigGAN | Large Scale GAN Training | Sep 2018 | |
| StyleGAN | Style-based GAN | Dec 2018 | NVIDIA |

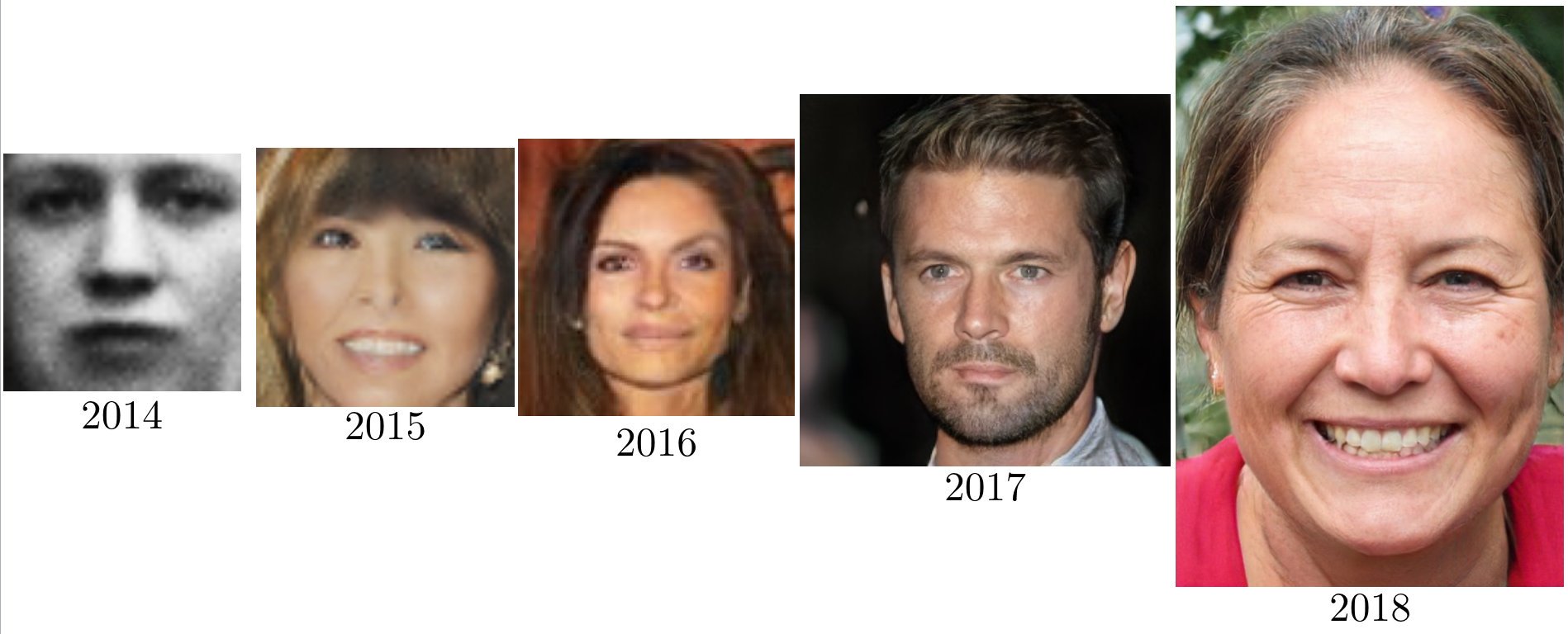

2014 (GAN) → 2015 (DCGAN) → 2016 (CoGAN) → 2017 (ProGAN) → 2018 (StyleGAN)

GANs (by type)

- Better loss functions

  - LSGAN https://arxiv.org/abs/1611.04076

  - RaGAN https://arxiv.org/abs/1807.00734

  - GAN v2 (Feature Matching) https://arxiv.org/abs/1606.03498

- CGAN: conditions generation on a class label, so one particular class can be generated (instead of a blurry mix of classes).

- InfoGAN: Disentangled representation (Jun. 2016, OpenAI)

- CycleGAN: Unpaired image-to-image translation between domains (Mar. 2017, Berkeley)

- SAGAN: Self-Attention GAN (May 2018, Google)

- Relativistic GAN: Rethinking the adversary (Jul. 2018, Lady Davis Institute)

- Progressive GAN: One step at a time (Oct. 2017, NVIDIA)

- DCGAN: Deep Convolutional GAN (Nov. 2015, Facebook)

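- BigGAN: SotA for image synthesis at publication (Sep. 2018). Same GAN techniques, but larger: increased model capacity and batch size.

- BEGAN: Boundary Equilibrium GAN, balances the generator and the discriminator (Mar. 2017, Google)

- WGAN: Wasserstein GAN. Learns the data distribution by minimizing an estimate of the Wasserstein distance (Jan. 2017, Facebook)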

- VAEGAN: Improving VAE by GANs (Feb. 2016, TU Denmark)

- SeqGAN: Sequence learning with GANs (May 2017, Shanghai Univ.)
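
Most of the "better loss function" variants only change how critic outputs are turned into losses. A NumPy sketch of the formulas, assuming `d_real`/`d_fake` (or `c_real`/`c_fake`) are raw critic outputs for a batch of real and generated images; LSGAN uses 0-1 target coding, RaGAN is shown in its relativistic average standard-GAN form, and WGAN uses its original weight clipping. Function names are hypothetical.

```python
import numpy as np

def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    return -np.logaddexp(0.0, -x)

def lsgan_losses(d_real, d_fake):
    """LSGAN: least-squares targets (real -> 1, fake -> 0, generator wants 1)."""
    d_loss = 0.5 * np.mean((d_real - 1) ** 2) + 0.5 * np.mean(d_fake ** 2)
    g_loss = 0.5 * np.mean((d_fake - 1) ** 2)
    return float(d_loss), float(g_loss)

def ragan_losses(c_real, c_fake):
    """RaGAN: is real data more realistic than the *average* fake (and vice versa)?"""
    real_rel = c_real - c_fake.mean()
    fake_rel = c_fake - c_real.mean()
    # log(1 - sigmoid(a)) == log_sigmoid(-a)
    d_loss = -np.mean(log_sigmoid(real_rel)) - np.mean(log_sigmoid(-fake_rel))
    g_loss = -np.mean(log_sigmoid(fake_rel)) - np.mean(log_sigmoid(-real_rel))
    return float(d_loss), float(g_loss)

def feature_matching_loss(feat_real, feat_fake):
    """Improved GAN feature matching: squared L2 distance between the batch means
    of an intermediate critic layer, feat_* of shape (batch, features)."""
    return float(np.sum((feat_real.mean(axis=0) - feat_fake.mean(axis=0)) ** 2))

def wgan_losses(c_real, c_fake):
    """WGAN: the critic maximizes E[C(real)] - E[C(fake)] (unbounded scores)."""
    d_loss = np.mean(c_fake) - np.mean(c_real)
    g_loss = -np.mean(c_fake)
    return float(d_loss), float(g_loss)

def clip_weights(weights, c=0.01):
    """WGAN weight clipping, applied to the critic after each update."""
    return [np.clip(w, -c, c) for w in weights]
```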
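
The CGAN idea of generating one particular class can be sketched as appending a one-hot class label to the generator's noise vector (the discriminator receives the label too). A minimal NumPy sketch; `conditional_input` is a hypothetical helper, and plain concatenation is only the simplest of several conditioning schemes.

```python
import numpy as np

def conditional_input(z, labels, num_classes):
    """Append a one-hot class label to each noise vector: (B, D) -> (B, D + num_classes)."""
    one_hot = np.eye(num_classes)[labels]  # row k of the identity is the one-hot for class k
    return np.concatenate([z, one_hot], axis=1)

rng = np.random.default_rng(0)
z = rng.normal(size=(4, 100))
labels = np.array([3, 3, 7, 1])
g_in = conditional_input(z, labels, num_classes=10)
print(g_in.shape)  # (4, 110)
```

At sampling time, fixing the label and varying only `z` yields different images of the same class.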